Part 1: The “definition” of Node

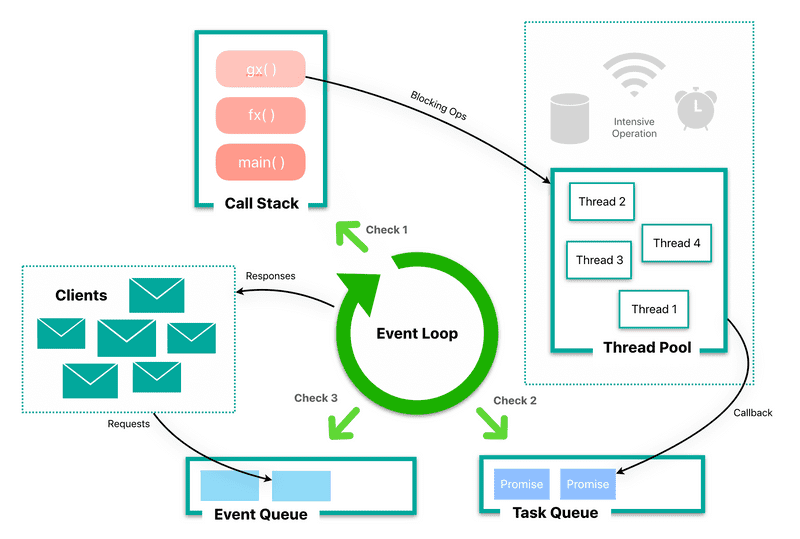

Node is commonly defined as an event-driven, single-threaded, server-side framework. This can be a bit misleading. Node’s event loop IS single-threaded, but much of the I/O work doesn’t actually happen on that thread! When our code starts a blocking I/O operation, the event loop hands it off, either to libuv’s pool of worker threads (file system access, DNS lookups, some crypto) or to the operating system’s own asynchronous networking facilities, and is immediately ready for the next request.
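To see this split in action, here is a minimal sketch using `crypto.pbkdf2`, one of the Node APIs that runs on libuv’s thread pool. The password, salt, and iteration count are arbitrary values chosen for illustration. The main thread records its message before either hash finishes, because the hashing happens on worker threads while the event loop stays free.

```javascript
const crypto = require("crypto");

const log = [];

// Wrap crypto.pbkdf2 in a promise; the hashing itself runs on a libuv
// worker thread, not on the JavaScript thread.
function hashInPool(label) {
  return new Promise((resolve, reject) => {
    // Arbitrary demo inputs: password, salt, iterations, key length, digest.
    crypto.pbkdf2("secret", "salt", 100000, 32, "sha256", (err, key) => {
      if (err) return reject(err);
      // This callback runs back on the single-threaded event loop.
      log.push(`${label} done`);
      resolve(key);
    });
  });
}

// Start two hashes; both calls return immediately.
const hashes = Promise.all([hashInPool("hash A"), hashInPool("hash B")]);

// Runs right away, because nothing above blocked the event loop.
log.push("main thread still free");

hashes.then((keys) => {
  console.log(log);
  console.log(keys.map((key) => key.length));
});
```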

In a traditional request/response model (IIS- or Apache-based servers), each incoming request is assigned a thread from a limited pool of threads. That thread reads and processes the request, including any required blocking I/O operations, then prepares a response, which the server sends back to the respective client. However, if a request comes in while every thread in the pool is in use, it is put in a queue where it must wait until a thread frees up.

Node does NOT create a new thread for each request. When a request arrives on its single-threaded event loop, Node determines whether handling it requires blocking I/O operations. If it does, that work is immediately delegated to background workers, and the event loop moves on. Once the work completes, the result is handed back to the event loop, which runs the request’s callback to respond to the respective client. Requests that need no blocking I/O, on the other hand, are processed immediately and their responses sent straight back to the client.

This is what makes Node ideal for handling many concurrent requests that involve blocking I/O, and a poor choice for anything CPU-intensive.
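The CPU-intensive downside can be sketched in a few lines. The `busyWait` and `measureTimerDelay` helpers are hypothetical, with `busyWait` standing in for any heavy computation: while it spins, the single JavaScript thread never returns to the event loop, so even a timer scheduled with a 0 ms delay has to wait for it.

```javascript
// Hypothetical stand-in for CPU-heavy work: spin until `ms` have passed.
function busyWait(ms) {
  const end = Date.now() + ms;
  while (Date.now() < end) {
    // Never yields to the event loop while spinning.
  }
}

// Schedule a 0 ms timer, then block the thread, and report how long the
// timer callback actually had to wait.
function measureTimerDelay(blockMs) {
  return new Promise((resolve) => {
    const scheduledAt = Date.now();
    setTimeout(() => resolve(Date.now() - scheduledAt), 0);
    busyWait(blockMs); // the timer callback cannot run until this returns
  });
}

measureTimerDelay(200).then((delay) => {
  console.log(`0 ms timer actually fired after ~${delay} ms`);
});
```

Because the timer callback can only run once the stack is empty, its delay is always at least as long as the blocking work.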

Part 2: Taking a deeper dive

In the previous section, we covered some fairly dense topics. Now we’re going to review them in more depth, paired with some tangible code examples so we can see these points in action.

To begin, let’s quickly review the difference between synchronous and asynchronous programming. Synchronous programming means we wait for something to finish: for example, I call to book an appointment and get put on hold. Asynchronous programming means we do not wait: in this case, I call and leave a message. So from here on, when we say JavaScript is asynchronous, we mean that when we call a function that performs blocking I/O operations, we don’t wait for its result before moving on.
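Here is a minimal sketch of the difference using Node’s `fs` module. The temporary file and its contents are made up for the demo: `readFileSync` keeps us on hold until the data arrives, while `fs.promises.readFile` is like leaving a message and carrying on with our day.

```javascript
const fs = require("fs");
const os = require("os");
const path = require("path");

// Hypothetical demo file, created just for this example.
const file = path.join(os.tmpdir(), "sync-vs-async-demo.txt");
fs.writeFileSync(file, "appointment booked");

const steps = [];

// Synchronous: like waiting on hold, nothing else runs until the read returns.
const text = fs.readFileSync(file, "utf8");
steps.push(`sync read: ${text}`);

// Asynchronous: like leaving a message, we ask for the data and move on.
const pending = fs.promises.readFile(file, "utf8").then((data) => {
  steps.push(`async read: ${data}`);
  return steps;
});
steps.push("moved on without waiting");

pending.then((result) => console.log(result));
```

Note that "moved on without waiting" is recorded before the asynchronous read completes.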

Sound familiar? To start, we’re just going to focus on code that doesn’t involve any blocking I/O.

When a request comes in, it is placed in a queue known as the “Event Queue” (aka message queue or task queue). The “Event Loop” (named literally after an indefinite loop) checks whether there’s anything in the event queue. When there is, the event loop dequeues the event and dispatches it to the call stack, where the corresponding function calls are stacked as frames in a LIFO (last in, first out) data structure. Each frame stores an execution context: the environment in which the code is evaluated (i.e. a function executes in its function context, while global code, main(), executes in the global context). Once a frame finishes executing, it is popped off the stack. Only when the entire stack is empty does the event loop take the next event from the queue and push it onto the stack.
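These moving parts can be modeled in a few lines. This is a toy model, not how Node or libuv is actually implemented: `eventQueue`, `callStack`, `call`, and `runEventLoop` are hypothetical names, and a real event loop would wait for new events instead of stopping when the queue runs dry.

```javascript
const eventQueue = []; // FIFO: the oldest event is handled first
const callStack = []; // LIFO: the newest frame is on top
const trace = [];

// Push a frame, run the function, then pop the frame.
function call(name, fn) {
  callStack.push(name);
  trace.push(`push ${name} (depth ${callStack.length})`);
  fn();
  callStack.pop();
  trace.push(`pop ${name}`);
}

// The next event is only taken once the previous one has run to completion
// and the stack is empty again. (A real loop would wait here for more events.)
function runEventLoop() {
  while (eventQueue.length > 0) {
    const event = eventQueue.shift();
    call(event.name, event.handler);
  }
}

eventQueue.push({ name: "request A", handler: () => call("handleA", () => {}) });
eventQueue.push({ name: "request B", handler: () => {} });

runEventLoop();
console.log(trace.join("\n"));
```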

I promised to show some code, so here we go! Try to think about what’s happening with the queue and the event loop as each line is executed!

```javascript
const second = () => {
  console.log("in second");
};

const first = () => {
  console.log("start of first");
  second();
  console.log("end of first");
};

first();
```

Let’s see if you got it!

- main(), our global execution context, is pushed onto the stack

- first is pushed on top of the stack

- console.log("start of first") is pushed onto the stack, executed, and popped off

- second is pushed on top of the stack

- console.log("in second") is pushed on top of the stack, executed, and popped off

- second has now finished executing and is popped off the stack

- console.log("end of first") is pushed on top of the stack, executed, and popped off

- first is now popped off

- Last but not least, our global execution context, main(), is popped off
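If you’d like to check your answer, this sketch replays the example while recording every push and pop. JavaScript doesn’t expose its real call stack this way, so `traced`, `stack`, and `events` are hypothetical helpers that maintain a stand-in stack by hand, with main() standing for the global execution context.

```javascript
const stack = []; // hand-maintained stand-in for the call stack
const events = [];

// Record a push, run the function, then record the pop.
function traced(name, fn) {
  stack.push(name);
  events.push(`push ${name}: [${stack.join(", ")}]`);
  fn();
  stack.pop();
  events.push(`pop ${name}`);
}

const second = () => {
  traced("console.log", () => console.log("in second"));
};

const first = () => {
  traced("console.log", () => console.log("start of first"));
  traced("second", second);
  traced("console.log", () => console.log("end of first"));
};

// main() stands for the global execution context.
traced("main", () => traced("first", first));

console.log(events.join("\n"));
```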

Pretty simple, right? That’s because this code runs synchronously, which makes it very easy to follow. Now let’s add some asynchronous work, using setTimeout as a stand-in for a slow network request! Again, try to think about what might be happening with our stack.

```javascript
const networkRequest = () => {
  setTimeout(() => {
    console.log("Async Code");
  }, 2000);
};

console.log("Start");
networkRequest();
console.log("End.. or is it!");
```

Ready?

- main() is pushed onto the stack

- console.log("Start") is pushed on top, executed, and popped off

- networkRequest() is pushed on top of the stack

- setTimeout is pushed on top of the stack with two arguments: the callback and the delay (2000 ms).

  This is where it gets tricky. In synchronous programming, we would wait for the timer to finish before moving on. Instead, setTimeout just registers the timer with libuv, the library that implements Node’s event loop and handles the queueing and processing of asynchronous events, and returns right away. So it is popped off the stack while the timer is still counting down, and libuv keeps track of the countdown for us.

  This is not blocking our main thread, so we move on!

- networkRequest() has now finished executing and is popped off the stack

- console.log("End.. or is it!") is pushed on top, executed, and popped off

- main() has nothing left to run, so it is popped off too, leaving the stack empty

Meanwhile, our timer is still counting down. About two seconds later it expires, and its callback is placed in the “Event Queue” (strictly speaking, Node runs expired timer callbacks during the “timers” phase of its event loop, but the Event Queue model works for our purposes). Note that this is not the “Job Queue” (aka “Microtask Queue”); that one is reserved for promise callbacks.

Our event loop, which is constantly checking whether the call stack is empty, sees that it is, checks the queues, and finds our callback waiting in the Event Queue!

- Our setTimeout() callback is pushed onto the now-empty stack

- console.log("Async Code") is pushed on top, executed, and popped off

- And finally, our setTimeout() callback is popped off
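The same walkthrough can be checked in code. This sketch shortens the delay to an assumed 50 ms and records the order of events in a hypothetical `order` array instead of relying on console output; the promise wrapper around `networkRequest` is an addition that lets us observe when the timer callback finally runs.

```javascript
const order = [];
const start = Date.now();

// Same shape as networkRequest above, but it resolves a promise when the
// timer's callback finally runs, so we can see when that happened.
const networkRequest = () =>
  new Promise((resolve) => {
    setTimeout(() => {
      order.push("Async Code");
      resolve(Date.now() - start);
    }, 50);
  });

order.push("Start");
const finished = networkRequest(); // returns immediately; the timer keeps running
order.push("End.. or is it!");

finished.then((elapsed) => {
  // order is now: Start, End.. or is it!, Async Code
  console.log(order);
  console.log(`callback ran roughly ${elapsed} ms after start`);
});
```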

To recap, we have two queues: the Event Queue and the Job Queue. The Job Queue is where our promise callbacks are stored, and it has a higher priority than the Event Queue.

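This means the event loop checks, in order:

- The call stack (it must be empty before anything else runs)

- The Job Queue (every callback in it runs, including any new ones added along the way, before moving on)

- The Event Queue (the next event is taken off and run)

Repeat!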
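Here is a small sketch of that order in Node 11 and later (older versions could run several timer callbacks before draining the Job Queue). A promise callback queued inside the first timer runs before the second timer, even though both timers were ready at the same time. The `order` array is just a hypothetical recorder for the demo.

```javascript
const order = [];

setTimeout(() => {
  order.push("timer 1");
  // Queued in the Job Queue, which is drained before the next event.
  Promise.resolve().then(() => order.push("job queued by timer 1"));
}, 0);

setTimeout(() => {
  order.push("timer 2");
}, 0);

// Also queued in the Job Queue, which is drained as soon as the stack empties.
Promise.resolve().then(() => order.push("job queued by main script"));
order.push("main script");

// Print once everything above has had a chance to run.
setTimeout(() => console.log(order), 10);
```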

Let’s look at one last example:

```javascript
console.log("Script start");

setTimeout(() => {
  console.log("setTimeout");
}, 0);

new Promise((resolve, reject) => {
  resolve("Promise 1 resolved");
}).then((res) => console.log(res));

new Promise((resolve, reject) => {
  resolve("Promise 2 resolved");
})
  .then((res) => {
    console.log(res);
    return new Promise((resolve, reject) => {
      resolve("Promise 3 resolved");
    });
  })
  .then((res) => console.log(res));

console.log("Script End");
```

The output is as follows:

```text
Script start
Script End
Promise 1 resolved
Promise 2 resolved
Promise 3 resolved
setTimeout
```

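As you can see, the promise callbacks in the Job Queue run before the setTimeout callback in the Event Queue, even though the timer was given a 0 ms delay!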
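To get a feel for how strong that priority is, this sketch queues a chain of 1,000 promise callbacks (an arbitrary number) alongside a 0 ms timer. Each callback adds the next one to the Job Queue, so the queue never empties until the whole chain is done, and only then does the timer run. `chain` and `state` are hypothetical names for the demo.

```javascript
const state = { jobsRun: 0, jobsSeenByTimer: null };

// Each step runs as a promise callback (Job Queue) and queues the next step.
function chain(remaining) {
  if (remaining === 0) return Promise.resolve();
  return Promise.resolve().then(() => {
    state.jobsRun += 1;
    return chain(remaining - 1);
  });
}

// Scheduled first, with no delay, yet it has to wait for the Job Queue.
const timerRan = new Promise((resolve) => {
  setTimeout(() => {
    state.jobsSeenByTimer = state.jobsRun;
    resolve(state);
  }, 0);
});

chain(1000);

timerRan.then((s) => {
  console.log(`the timer ran after ${s.jobsSeenByTimer} promise callbacks`);
});
```

The flip side of this priority is that an endless stream of promise callbacks would keep the Event Queue waiting indefinitely.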

Part 3: Summary

Let’s quickly recap what happens when our Node server gets a request:

- Clients send requests to our server

- The server internally maintains a limited thread pool to service client requests

- The server receives those requests and places them in the “Event Queue”

- The server’s internal “Event Loop” (single-threaded) checks whether any client request is waiting in the Event Queue

  - If not, it waits indefinitely for incoming requests

  - If so, it picks up one client request from the Event Queue and starts processing it

- If that client request **does not** require any blocking I/O operations, the Event Loop processes everything, prepares the response, and sends it back to the client

- If that client request **does** require blocking I/O operations, such as interacting with a database, the file system, or external services, it follows a different approach:

  - The Event Loop checks thread availability in the internal thread pool (network operations such as database queries are usually handed to the operating system instead, but the Event Loop is freed up in the same way)

  - It picks a thread and assigns the request’s blocking operation to that thread

  - That thread performs the blocking I/O operation and notifies the Event Loop when it’s done; the Event Loop then runs the request’s callback, which prepares the response and sends it back to the client
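The steps above can be sketched as a toy simulation. This is not Node’s real internals: `POOL_SIZE`, `handle`, `runOnWorker`, and the request objects are hypothetical, the pool is kept at two workers so it fills up quickly (libuv’s real pool has four threads by default), and a 10 ms timer stands in for the blocking operation a worker thread would perform. It shows a simple request answered immediately, blocking requests taking the free workers, and an extra request waiting until a worker frees up.

```javascript
const POOL_SIZE = 2; // hypothetical pool size, kept small for the demo
let busyWorkers = 0;
const waiting = []; // blocking requests waiting for a free worker
const log = [];

// Stand-in for a blocking operation performed by a worker thread.
function blockingIO(req) {
  return new Promise((resolve) => {
    setTimeout(() => resolve(`data for ${req.id}`), 10);
  });
}

function runOnWorker(req) {
  busyWorkers += 1;
  log.push(`${req.id} -> worker`);
  blockingIO(req).then((data) => {
    // Back on the event loop: the worker is free, so prepare the response.
    busyWorkers -= 1;
    log.push(`${req.id} responded (${data})`);
    // Hand the next waiting request, if any, to the freed worker.
    if (waiting.length > 0) runOnWorker(waiting.shift());
  });
}

// The event loop's decision for each request it picks up.
function handle(req) {
  if (!req.needsIO) {
    log.push(`${req.id} responded immediately`);
  } else if (busyWorkers < POOL_SIZE) {
    runOnWorker(req);
  } else {
    log.push(`${req.id} waits for a worker`);
    waiting.push(req);
  }
}

// Requests as they come off the Event Queue.
const requests = [
  { id: "r1", needsIO: true },
  { id: "r2", needsIO: false },
  { id: "r3", needsIO: true },
  { id: "r4", needsIO: true },
];
requests.forEach(handle);

setTimeout(() => console.log(log.join("\n")), 100);
```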

This architecture makes Node an ideal choice for high-volume, I/O-heavy applications, and a poor choice for those that require CPU-intensive operations.